7.2 Language

Learning Objectives

By the end of this section, you will be able to:

- Define language and demonstrate familiarity with the components of language

- Understand how the use of language develops

- Explain the relationship between language and thinking

Language is a communication system that involves using words and systematic rules to organize those words to transmit information from one individual to another. Along with music, language is one of the most common and universal features of human culture and society. While language is a form of communication, not all communication is language. Many species communicate with one another through their postures, movements, odors, or vocalizations. This communication is crucial for species that need to interact and develop social relationships with their conspecifics. However, many people have asserted that it is language that makes humans unique among all of the animal species (Corballis & Suddendorf, 2007; Tomasello & Rakoczy, 2003). This section will focus on what distinguishes language as a special form of communication, how the use of language develops, and how language affects the way we think.

COMPONENTS OF LANGUAGE

Language, be it spoken, signed, or written, has specific components: a lexicon and grammar. Lexicon refers to the words of a given language. Thus, lexicon is a language’s vocabulary. Grammar refers to the set of rules that are used to convey meaning through the use of the lexicon (Fernández & Cairns, 2011). For instance, English grammar dictates that most verbs receive an “-ed” at the end to indicate past tense. English is mainly a subject-verb-object (SVO) language, in which the subject comes first, followed by the verb and then the object, as in the sentence “The boy eats the apple.” Standard Mandarin is also an SVO language, although in simple sentences with clear context it can flexibly use SOV or OSV order. Dutch and German use SVO order in ordinary main clauses but switch to SOV in subordinate clauses, such as those introduced by “that” or “who” (“dat” or “wie” in Dutch and “dass” or “wer” in German). For example, the basic Dutch sentence “Ik zeg iets over Ben” (“I say something about Ben”) is in SVO order. However, when a clause is introduced by “dat” (“that”), its verb moves to the end and the clause becomes SOV, as in “Ik zeg dat Ben een riem gekocht heeft” (literally “I say that Ben a belt bought has,” which sounds ungrammatical in English). Although SVO order is familiar to English speakers, SOV is the most common type among natural languages in which word order is meaningful, followed by SVO; together these two types account for more than 75% of the natural languages with a preferred order (Crystal, 2004).
SOV languages, past and present, include Ancient Greek, Hittite, Mongolian, Nepali, Japanese, Korean, Turkish, Uzbek, Sumerian, Sicilian, virtually all of the indigenous languages of the Caucasus, and many Native American and Indigenous Mexican languages, such as Cherokee, Dakota, and the Uto-Aztecan languages (including Hopi). SOV order survives only rarely in English; one example is the phrase “I thee wed” in the commonly recited wedding vow “With this ring, I thee wed” (see Fischer, 1997, for an interesting history of verbs related to marriage).
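As a rough illustration of the word-order types above, the sketch below treats basic word order as nothing more than a rule for arranging three labeled constituents. The function name `arrange` and its parameters are hypothetical, chosen only for this example. Real grammars involve much more (case marking, agreement, and the subordinate-clause shifts described for Dutch and German), so this is a simplification, not a model of any particular language.

```python
def arrange(subject: str, verb: str, obj: str, order: str) -> str:
    """Place subject (S), verb (V), and object (O) in the given order.

    Hypothetical helper for illustration only: it captures basic word
    order and nothing else about a language's grammar.
    """
    if sorted(order) != ["O", "S", "V"]:
        raise ValueError(f"order must be a permutation of 'SVO', got {order!r}")
    slots = {"S": subject, "V": verb, "O": obj}
    return " ".join(slots[label] for label in order)


print(arrange("the boy", "eats", "the apple", "SVO"))  # the boy eats the apple
print(arrange("the boy", "eats", "the apple", "SOV"))  # the boy the apple eats
print(arrange("I", "wed", "thee", "SOV"))              # I thee wed
```

Feeding the pieces of the English wedding vow through the SOV order reproduces the archaic “I thee wed,” while the same pieces in SVO order give the modern “I wed thee.”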

Phonemes (or, in sign languages, cheremes) are the building blocks from which words are created. Words are formed by combining the phonemes of a given language into units that carry meaning. A phoneme (e.g., the sounds “ah” vs. “eh”) is a basic sound unit of a given language, and different languages have different sets of phonemes. Phonemes are combined to form morphemes, which are the smallest units of language that convey some type of meaning (e.g., “I” is both a phoneme and a morpheme). We use semantics and syntax to construct language. Semantics and syntax are part of a language’s grammar. Semantics refers to the process by which we derive meaning from morphemes and words. As a field of study, semantics has ties to many representational theories of meaning that are beyond the scope of the current chapter, including truth theories, coherence theories, and correspondence theories of meaning, all of which relate to the philosophical study of reality and the representation of meaning. Syntax refers to the study of how units of language are combined, that is, how words are organized into sentences, without reference to their meaning (Chomsky, 1965; Fernández & Cairns, 2011). The study of syntax examines the rules, principles, and processes that govern the structure of sentences in a language, including the order and arrangement of the pieces that make up a message.

We apply the rules of grammar to organize the lexicon in novel and creative ways, which allow us to communicate information about both concrete and abstract concepts. We can talk about our immediate and observable surroundings as well as the surface of unseen planets. We can share our innermost thoughts, our plans for the future, and debate the value of a college education. We can provide detailed instructions for cooking a meal, fixing a car, or building a fire. The flexibility that language provides to relay vastly different types of information is a property that makes language so distinct as a mode of communication among humans.

LANGUAGE UNIVERSALS

Obviously, there are many differences between individual languages and language categories, such as the SVO, SOV, and OSV orders discussed above. However, there are also many key features found in all languages that help discriminate between human language and animal communication. Hockett (1963) published one of the first lists of language universals, proposing 13 features that are common to all known languages and adding that only human language contains all 13, whereas animal communication may contain some of these features but not all of them (see below for the complete list). Many of the universals Hockett identified, such as the vocal-auditory channel and transitoriness (also known as rapid fading), are not essential features of human language (sign languages, for example, do not use the vocal-auditory channel), but they were likely important in the evolution that led to the current state of the world’s languages (Radvansky & Ashcraft, 2014).

Hockett’s Linguistic Universals
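Hockett’s features are usually summarized as the 13 design features listed in the code below. The sketch stores them as a set so that any communication system can be checked for the features it lacks, mirroring Hockett’s claim that only human language shows all 13. The names `HOCKETT_FEATURES`, `missing_features`, and `has_all_features` are hypothetical, and `example_system` is made up for illustration; it does not describe any real species.

```python
# The 13 design features as commonly listed from Hockett's work.
HOCKETT_FEATURES = frozenset({
    "vocal-auditory channel",
    "broadcast transmission and directional reception",
    "rapid fading (transitoriness)",
    "interchangeability",
    "total feedback",
    "specialization",
    "semanticity",
    "arbitrariness",
    "discreteness",
    "displacement",
    "productivity",
    "traditional transmission",
    "duality of patterning",
})


def missing_features(system_features):
    """Return, in alphabetical order, the design features a system lacks."""
    return sorted(HOCKETT_FEATURES - set(system_features))


def has_all_features(system_features):
    """True only if the system shows every one of the 13 features."""
    return not missing_features(system_features)


# Hypothetical communication system, for illustration only.
example_system = {"vocal-auditory channel", "semanticity", "interchangeability"}
print(has_all_features(example_system))       # False
print(len(missing_features(example_system)))  # 10
print(has_all_features(HOCKETT_FEATURES))     # True
```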

LANGUAGE DEVELOPMENT

Given the remarkable complexity of a language, one might expect that mastering a language would be an especially arduous task; indeed, for those of us trying to learn a second language as adults, this might seem to be true. However, young children master language very quickly with relative ease. B. F. Skinner (1957) proposed that language is learned through reinforcement. Noam Chomsky (1965) criticized this behaviorist approach, asserting instead that the mechanisms underlying language acquisition are biologically determined. The use of language develops in the absence of formal instruction and appears to follow a very similar pattern in children from vastly different cultures and backgrounds. It would seem, therefore, that we are born with a biological predisposition to acquire a language (Chomsky, 1965; Fernández & Cairns, 2011). Moreover, it appears that there is a critical period for language acquisition, such that this proficiency at acquiring language is maximal early in life; generally, as people age, the ease with which they acquire and master new languages diminishes (Johnson & Newport, 1989; Lenneberg, 1967; Singleton, 1995).

Children begin to learn about language from a very early age (table below). In fact, it appears that this is occurring even before we are born. Newborns show preference for their mother’s voice and appear to be able to discriminate between the language spoken by their mother and other languages. Babies are also attuned to the languages being used around them and show preferences for videos of faces that are moving in synchrony with the audio of spoken language versus videos that do not synchronize with the audio (Blossom & Morgan, 2006; Pickens, 1994; Spelke & Cortelyou, 1981).

| Stage | Age | Developmental Language and Communication |
|---|---|---|
| 1 | 0–3 months | Reflexive communication |
| 2 | 3–8 months | Reflexive communication; interest in others |
| 3 | 8–13 months | Intentional communication; sociability |
| 4 | 12–18 months | First words |
| 5 | 18–24 months | Simple sentences of two words |
| 6 | 2–3 years | Sentences of three or more words |
| 7 | 3–5 years | Complex sentences; has conversations |

The Case of Genie

In the fall of 1970, a social worker in the Los Angeles area found a 13-year-old girl who was being raised in extremely neglectful and abusive conditions. The girl, who came to be known as Genie, had lived most of her life tied to a potty chair or confined to a crib in a small room that was kept closed with the curtains drawn. For a little over a decade, Genie had virtually no social interaction and no access to the outside world. As a result of these conditions, Genie was unable to stand up, chew solid food, or speak (Fromkin et al., 1974; Rymer, 1993). The police took Genie into protective custody.

Genie’s abilities improved dramatically following her removal from her abusive environment, and early on, it appeared she was acquiring language—much later than would be predicted by critical period hypotheses that had been posited at the time (Fromkin et al., 1974). Genie managed to amass an impressive vocabulary in a relatively short amount of time. However, she never developed a mastery of the grammatical aspects of language (Curtiss, 1981). Perhaps being deprived of the opportunity to learn language during a critical period impeded Genie’s ability to fully acquire and use language.

You may recall that each language has its own set of phonemes that are used to generate morphemes, words, and so on. Babies can discriminate among the sounds that make up a language (for example, they can tell the difference between the “s” in vision and the “ss” in fission); early on, they can differentiate between the sounds of all human languages, even those that do not occur in the languages that are used in their environments. However, by the time that they are about 1 year old, they can only discriminate among those phonemes that are used in the language or languages in their environments (Jensen, 2011; Werker & Lalonde, 1988; Werker & Tees, 1984).

After the first few months of life, babies enter what is known as the babbling stage, during which time they tend to produce single syllables that are repeated over and over. As time passes, more variations appear in the syllables that they produce. During this time, it is unlikely that the babies are trying to communicate; they are just as likely to babble when they are alone as when they are with their caregivers (Fernández & Cairns, 2011). Interestingly, babies who are raised in environments in which sign language is used will also begin to show babbling in the gestures of their hands during this stage (Petitto, Holowka, Sergio, Levy, & Ostry, 2004).

Generally, a child’s first word is uttered sometime between 12 and 18 months of age, and for the next few months, the child will remain in the “one word” stage of language development. During this time, children know a number of words, but they only produce one-word utterances. The child’s early vocabulary is limited to familiar objects or events, often nouns. Although children in this stage only make one-word utterances, these words often carry larger meaning (Fernández & Cairns, 2011). So, for example, a child saying “cookie” could be identifying a cookie or asking for a cookie.

As a child’s lexicon grows, she begins to utter simple sentences and to acquire new vocabulary at a very rapid pace. In addition, children begin to demonstrate a clear understanding of the specific rules (grammar and semantics) that apply to their language(s). Even the mistakes that children sometimes make provide evidence of just how much they understand about those rules. This is sometimes seen in the form of overgeneralization. In this context, overgeneralization refers to an extension of a language rule to an exception to the rule. In English, for instance, an “s” is usually added to the end of a noun to indicate plurality, so we speak of one dog versus two dogs. Young children will overgeneralize this rule to cases that are exceptions to the “add an s to the end of the word” rule and say things like “those two gooses” or “three mouses.” Clearly, the rules of the language are understood, even if the exceptions to the rules are still being learned (Moskowitz, 1978).
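Overgeneralization can be pictured as applying a rule before its exceptions have been memorized. The sketch below contrasts a hypothetical learner who applies only a simplified “add an s” rule with a speaker who first checks a small list of stored irregular plurals. The function names and the three-item exception list are assumptions made for illustration; real English pluralization also involves spelling changes such as “-es” and “-ies,” which this sketch ignores.

```python
# A tiny, illustrative set of irregular plurals (far from complete).
IRREGULAR_PLURALS = {"goose": "geese", "mouse": "mice", "child": "children"}


def learner_plural(noun: str) -> str:
    """Apply only the simplified regular rule: add an 's'."""
    return noun + "s"


def adult_plural(noun: str) -> str:
    """Use a memorized exception if one exists, otherwise apply the rule."""
    return IRREGULAR_PLURALS.get(noun, learner_plural(noun))


for noun in ["dog", "goose", "mouse"]:
    print(f"{noun}: learner says {learner_plural(noun)!r}, adult says {adult_plural(noun)!r}")
```

Both versions agree on regular nouns such as “dog”; they differ only on exceptions, which is exactly where children’s overgeneralization errors appear.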

LANGUAGE AND THOUGHT

When we speak one language, we agree that words are representations of ideas, people, places, and events. The given language that children learn is connected to their culture and surroundings. But can words themselves shape the way we think about things? Psychologists have long investigated the question of whether language shapes thoughts and actions, or whether our thoughts and beliefs shape our language. Two researchers, Edward Sapir and Benjamin Lee Whorf, began this investigation in the 1940s. They wanted to understand how the language habits of a community encourage members of that community to interpret language in a particular manner (Sapir, 1941/1964). Sapir and Whorf proposed that language determines thought, suggesting, for example, that a person whose community language did not have past-tense verbs would be challenged to think about the past (Whorf, 1956). Researchers have since identified this view as too absolute, pointing out a lack of empiricism behind what Sapir and Whorf proposed (Abler, 2013; Boroditsky, 2011; van Troyer, 1994). Today, psychologists continue to study and debate the relationship between language and thought.

COGNITIVE PROCESSING OF LANGUAGE – EEG AND ERP

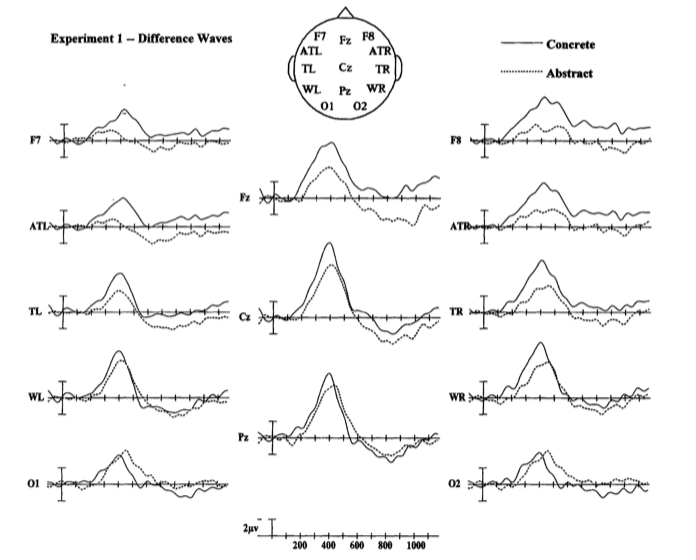

Modern advances in electrical recording systems and computer science have led to cognitive neuroscientific techniques such as electroencephalography (EEG), which allows researchers to measure electrical brain activity in real time while participants perform language tasks. EEG recordings provide further evidence for models of language that describe the timeline of cognitive processing, from sensory perception to meaning interpretation and response production. EEG data can be analyzed in two complementary ways, known as spectral analysis and event-related potentials (ERPs), each of which provides evidence the other method may lack. In spectral analysis, patterns of activity in specific frequency bands are examined over periods ranging from fractions of a second to minutes, or even hours in the case of many sleep studies that record EEG. Analyzing changes in power (the squared magnitude of electrical activity in a given frequency band) is a well-established practice in electrophysiological research, and many changes in spectral activity over time windows of a second or longer have been linked to specific behavioral patterns (Jung et al., 1997; Klimesch et al., 1998; Aftanas & Golocheikine, 2001). Spectral analysis can also complement the ERP technique, in which EEG data are analyzed on a much smaller time scale. In ERP analysis, the brain’s electrical response to a stimulus is typically evaluated on a millisecond scale. This allows subconscious mechanisms, such as those involved in language processing, to be tracked, and makes it possible to identify specific components (electrical deflections thought to be related to specific cognitive activities) that represent different stages of language processing.
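The notion of power used in spectral analysis can be made concrete with a short calculation. The sketch below computes power as the squared magnitude of each discrete Fourier transform (DFT) coefficient and sums it over a frequency band. The sampling rate, the synthetic 10 Hz test signal, the function name `band_power`, and the band limits (8–12 Hz for alpha and 13–30 Hz for beta, one common convention) are all assumptions for illustration. Research pipelines typically apply a fast Fourier transform with windowing and averaging (e.g., Welch’s method) to real recordings rather than this plain DFT loop.

```python
import math


def band_power(signal, fs, low, high):
    """Sum of squared DFT magnitudes over frequency bins within [low, high] Hz."""
    n = len(signal)
    total = 0.0
    for k in range(n // 2 + 1):          # non-negative frequency bins only
        freq = k * fs / n                 # frequency of bin k in Hz
        if low <= freq <= high:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(signal))
            total += re * re + im * im    # power = squared magnitude
    return total


fs = 250  # hypothetical sampling rate in Hz
# One second of a pure 10 Hz sine wave, standing in for an alpha-dominated signal.
signal = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]

alpha = band_power(signal, fs, 8, 12)
beta = band_power(signal, fs, 13, 30)
print(alpha > beta)  # True
```

Because all of the test signal’s energy sits at 10 Hz, nearly all of its power falls in the alpha band; comparing such band powers across conditions or over time is the basic move behind the spectral studies cited above.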
ERP components are usually labeled with a letter indicating a positive (P) or negative (N) deflection and a number indicating the approximate time (in ms) after stimulus onset at which the component, and differences between conditions, tend to occur. There are exceptions to this convention, such as the contingent negative variation (CNV) and the lateralized readiness potential (LRP). Examples of ERP components include the P100 and N100, early components occurring around 100 ms after stimulus onset that are related to early sensory processing (Hillyard, Vogel & Luck, 1998; Luck, Woodman & Vogel, 2000); the P300, a positive deflection that tends to occur 250 to 400 ms after an auditory or visual stimulus and is associated with infrequent or unpredictable stimuli, among other processes (Squires, Squires & Hillyard, 1975; Polich, 2012); and the N400, which is commonly studied in relation to semantics. The N400 occurs around 400 ms after a word is presented and has been found to appear when the word is incongruent with what is expected in the context of a sentence (Swaab et al., 2012).
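The naming convention for ERP components can be expressed as a simple pattern: a polarity letter followed by a number. The sketch below parses names that follow this pattern and returns `None` for names such as CNV or LRP that do not. The function name `parse_erp_name` is hypothetical. The number is only a nominal latency (the P300, for instance, can occur anywhere from 250 to 400 ms), and some researchers use ordinal labels such as P1 or N2 (the first positive or second negative peak), which this sketch would wrongly read as latencies in milliseconds.

```python
import re

# Polarity letter (P or N) followed by digits, e.g. "P300" or "N400".
ERP_NAME = re.compile(r"([PN])(\d+)")


def parse_erp_name(name: str):
    """Return (polarity, nominal latency in ms), or None if the name does not fit the pattern."""
    match = ERP_NAME.fullmatch(name)
    if match is None:
        return None  # e.g. "CNV" or "LRP"
    polarity = "positive" if match.group(1) == "P" else "negative"
    return polarity, int(match.group(2))


for name in ["P100", "N100", "P300", "N400", "CNV", "LRP"]:
    print(name, parse_erp_name(name))
```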

Related to the processing of meaning expectations, Lee and Federmeier (2009) recorded EEG while comparing the effects of syntactic and semantic cues in sentences, in order to determine whether the processing consequences of word ambiguity are qualitatively different when sentence meaning is constrained syntactically or semantically. To make these comparisons, they evaluated ERP responses to noun-verb homographs (words with the same spelling but different meanings) at the end of sentences in which the final word made sense both syntactically and semantically (“After walking around on her infected foot, she now had a boil”), compared with sentences that made syntactic sense (sentence structure) but not semantic sense (“After trying around on her important jury, she now had a boil”). ERP responses to sentence-final noun-verb homographs and unambiguous words in the syntactically congruent-only sentences and in the syntactically and semantically congruent sentences showed that early perceptual components were the same across conditions, but that a centro-posterior N400 component was notably reduced when the sentence was both syntactically and semantically congruent. Overall, N400 amplitudes to final words were reduced in the congruent sentences relative to the syntactic-only sentences, suggesting that less ambiguity resolution was required when syntax and semantics were both congruent with the final word, as reflected by the smaller negative amplitude about 400 ms after the final word was presented.

Figure 7.01. Grand average ERPs to ambiguous words (dashed line) and unambiguous words (solid line), plotted separately for syntactic prose sentences (left panel) and congruent sentences (right panel) at 8 representative electrode sites. Positions of the plotted sites are indicated by filled circles on the center head diagram (nose at top). Negative is plotted up, as is common in the EEG literature. In the syntactic prose sentences, the response to ambiguous words (e.g., ‘the season/to season’) is more negative than the response to unambiguous words (e.g., ‘the logic/to eat’) over the frontal channels, between about 200 and 700 ms after stimulus onset. In the congruent sentences, there is no enhanced frontal negativity; instead, ambiguous words elicit a more negative response over central/posterior sites in the N400 time window (250–500 ms). (Adapted from Lee & Federmeier, 2009.)
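Differences in the N400 such as those described above are often quantified as the mean voltage within a time window after word onset. The sketch below computes that measure for the 250–500 ms window mentioned in the figure caption. The waveforms are entirely made up (a single negative bump peaking at 400 ms, scaled to -4 µV and -2 µV) and are not data from Lee and Federmeier (2009); the function names and the 4 ms sampling interval are likewise assumptions for illustration.

```python
import math


def mean_amplitude(waveform, times_ms, start_ms, end_ms):
    """Average voltage of the samples whose time falls within [start_ms, end_ms]."""
    window = [v for v, t in zip(waveform, times_ms) if start_ms <= t <= end_ms]
    if not window:
        raise ValueError("no samples fall inside the requested window")
    return sum(window) / len(window)


# Sample times in ms after the final word appears (one sample every 4 ms, assumed).
times = list(range(0, 800, 4))


def made_up_waveform(peak_uv):
    """Hypothetical ERP: one negative deflection centred at 400 ms (not real data)."""
    return [peak_uv * math.exp(-(((t - 400) / 80) ** 2)) for t in times]


syntactic_only = made_up_waveform(-4.0)  # larger N400 (illustrative value)
congruent = made_up_waveform(-2.0)       # reduced N400 (illustrative value)

n400_syntactic = mean_amplitude(syntactic_only, times, 250, 500)
n400_congruent = mean_amplitude(congruent, times, 250, 500)
print(f"syntactic-only: {n400_syntactic:.2f} uV, congruent: {n400_congruent:.2f} uV")
```

A more negative window mean corresponds to a larger N400. In this toy example the syntactic-only waveform was constructed to be twice as negative, so its window mean is exactly twice that of the congruent waveform.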

THE MEANING OF LANGUAGE

Think about what you know of other languages; perhaps you even speak multiple languages. Imagine for a moment that your closest friend fluently speaks more than one language. Do you think that friend thinks differently, depending on which language is being spoken? You may know a few words that are not translatable from their original language into English. For example, the Portuguese word saudade originated during the 15th century, when Portuguese sailors left home to explore the seas and travel to Africa or Asia. Those left behind described the emptiness and fondness they felt as saudade (figure below). The word came to express many meanings, including loss, nostalgia, yearning, warm memories, and hope. There is no single word in English that includes all of those emotions in a single description. Do words such as saudade indicate that different languages produce different patterns of thought in people? What do you think?

These two works of art depict saudade. (a) Saudade de Nápoles, which is translated into “missing Naples,” was painted by Bertha Worms in 1895. (b) Almeida Júnior painted Saudade in 1899.

Language may indeed influence the way that we think, an idea known as linguistic determinism. One recent demonstration of this phenomenon involved differences in the way that English and Mandarin Chinese speakers talk and think about time. English speakers tend to talk about time using terms that describe changes along a horizontal dimension, for example, saying something like “I’m running behind schedule” or “Don’t get ahead of yourself.” While Mandarin Chinese speakers also describe time in horizontal terms, it is not uncommon to also use terms associated with a vertical arrangement. For example, the past might be described as being “up” and the future as being “down.” It turns out that these differences in language translate into differences in performance on cognitive tests designed to measure how quickly an individual can recognize temporal relationships. Specifically, when given a series of tasks with vertical priming, Mandarin Chinese speakers were faster at recognizing temporal relationships between months. Indeed, Boroditsky (2001) sees these results as suggesting that “habits in language encourage habits in thought” (p. 12).

One group of researchers who wanted to investigate how language influences thought compared how English speakers and the Dani people of New Guinea think and speak about color. The Dani have two words for color: one word for light and one word for dark. In contrast, the English language has 11 color words. Researchers hypothesized that the number of color terms could limit the ways that the Dani people conceptualized color. However, the Dani were able to distinguish colors with the same ability as English speakers, despite having fewer words at their disposal (Berlin & Kay, 1969). A recent review of research aimed at determining how language might affect something like color perception suggests that language can influence perceptual phenomena, especially in the left hemisphere of the brain. You may recall from earlier chapters that the left hemisphere is associated with language for most people. However, the right (less linguistic) hemisphere of the brain is less affected by linguistic influences on perception (Regier & Kay, 2009).

SUMMARY

Language is a communication system that has both a lexicon and a system of grammar. Language acquisition occurs naturally and effortlessly during the early stages of life, and this acquisition occurs in a predictable sequence for individuals around the world. Behaviorists such as B. F. Skinner suggested that language is learned through years of reinforcement, whereas linguists such as Noam Chomsky and many cognitive psychologists tend to believe that language is innate, with the mechanisms for acquiring it present from birth. Language has a strong influence on thought, and how language may influence cognition remains an area of study and debate in psychology.

References:

OpenStax Psychology text by Kathryn Dumper, William Jenkins, Arlene Lacombe, Marilyn Lovett and Marion Perlmutter, licensed under CC BY 4.0. https://openstax.org/details/books/psychology

Exercises

Review Questions:

1. ________ provides general principles for organizing words into meaningful sentences.

a. Linguistic determinism

b. Lexicon

c. Semantics

d. Syntax

2. ________ are the smallest unit of language that carry meaning.

a. Lexicon

b. Phonemes

c. Morphemes

d. Syntax

3. The meaning of words and phrases is determined by applying the rules of ________.

a. lexicon

b. phonemes

c. overgeneralization

d. semantics

4. ________ is (are) the basic sound units of a spoken language.

a. Syntax

b. Phonemes

c. Morphemes

d. Grammar

Critical Thinking Questions:

1. How do words not only represent our thoughts but also represent our values?

2. How could grammatical errors actually be indicative of language acquisition in children?

Personal Application Question:

1. Can you think of examples of how language affects cognition?

Glossary:

grammar

language

lexicon

morpheme

overgeneralization

phoneme

semantics

syntax

Answers to Exercises

Review Questions:

1. D

2. C

3. D

4. B

Critical Thinking Questions:

1. People tend to talk about the things that are important to them or the things they think about the most. What we talk about, therefore, is a reflection of our values.

2. Grammatical errors that involve overgeneralization of specific rules of a given language indicate that the child recognizes the rule, even if he or she doesn’t recognize all of the subtleties or exceptions involved in the rule’s application.

Glossary:

grammar: set of rules that are used to convey meaning through the use of a lexicon

language: communication system that involves using words to transmit information from one individual to another

lexicon: the words of a given language

morpheme: smallest unit of language that conveys some type of meaning

overgeneralization: extension of a rule that exists in a given language to an exception to the rule

phoneme: basic sound unit of a given language

semantics: process by which we derive meaning from morphemes and words

syntax: manner by which words are organized into sentences