5.4 Hearing

Learning Objectives

By the end of this section, you will be able to:

- Describe the basic anatomy and function of the auditory system

- Explain how we encode and perceive pitch

- Discuss how we localize sound

Our auditory system converts pressure waves into meaningful units of sound. This sense of hearing allows us to hear the sounds of nature, appreciate the beauty of music, and communicate with one another through spoken language. This section provides an overview of the basic anatomy and function of the auditory system. It covers how the sensory stimulus is translated into neural impulses, where in the brain that information is processed, how we perceive pitch, and how we know where sound is coming from.

ANATOMY OF THE AUDITORY SYSTEM

The ear can be separated into three main sections. The outer ear includes the pinna (the visible part of the ear that protrudes from the head), the auditory canal, and the tympanic membrane, or eardrum. The middle ear contains three tiny bones known as the ossicles: the malleus (or hammer), the incus (or anvil), and the stapes (or stirrup). The inner ear contains the semicircular canals, which are involved in balance and movement (the vestibular sense), and the cochlea. The cochlea is a fluid-filled, snail-shaped structure that contains the sensory receptor cells (hair cells) of the auditory system (figure below).

The ear is divided into outer (pinna and tympanic membrane), middle (the three ossicles: malleus, incus, and stapes), and inner (cochlea and basilar membrane) divisions.

Sound waves travel along the auditory canal and strike the tympanic membrane, causing it to vibrate. This vibration results in movement of the three ossicles. As the ossicles move, the stapes presses into a thin membrane of the cochlea known as the oval window. As the stapes presses into the oval window, the fluid inside the cochlea begins to move, which in turn stimulates hair cells, the auditory receptor cells of the inner ear. Hair cells are embedded in the basilar membrane and are topped with tiny hair-like projections called stereocilia. The basilar membrane is a thin strip of tissue within the cochlea that houses the hair cells; because different regions of the membrane respond to different frequencies, it allows a sound to be broken down into its component frequencies.

CILIA AND THE PERCEPTION OF PITCH

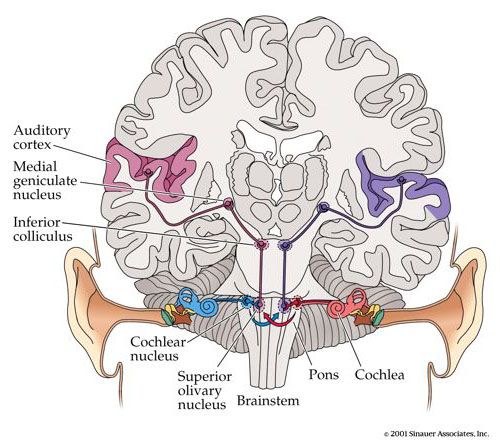

The activation of hair cells is a mechanical process: a hair cell is stimulated when its stereocilia are bent by the movement of fluid in the cochlea, which is driven by vibrations passed on from the middle ear. This bending triggers a chemical reaction that produces action potentials in the auditory nerve, so that the auditory information can be processed further in the brain. Auditory information is transmitted via the auditory nerve, through brainstem nuclei such as the superior olivary complex, to the inferior colliculus (in the upper portion of the brainstem), the medial geniculate nucleus of the thalamus, and finally the auditory cortex in the temporal lobe of the brain for processing. As in the visual system, there is also evidence that information about auditory recognition and localization is processed in parallel streams (Rauschecker & Tian, 2000; Renier et al., 2009).

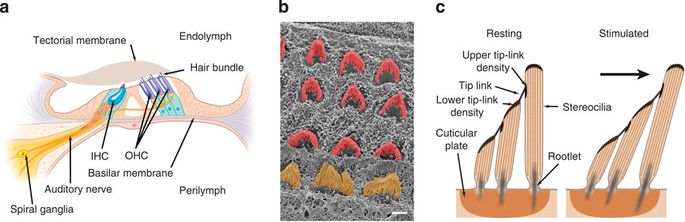

The organ of Corti. (a) Cross-section of the organ of Corti pointing out the salient features relevant to hearing transduction. Hair bundles on the apical surface of inner hair cells (IHCs) and outer hair cells (OHCs) are bathed in endolymph, whereas the basolateral side of hair cells is bathed in perilymph. (b) Scanning electron microscopy image looking at the apical surface of hair cells with the tectorial membrane removed. IHC and OHC hair bundles are pseudo-coloured orange and red, respectively. Scale bar, 2 μm. (c) Enlargement of a schematic of the hair bundle and hair cell apical surface seen in (a). Salient features of the stereocilia rows that make up the hair bundle are indicated. When hair bundles are stimulated, the stereocilia are sheared towards the tallest row of stereocilia; this is defined as the positive direction of stimulation (from Peng, Salles, Pan & Ricci, 2011).

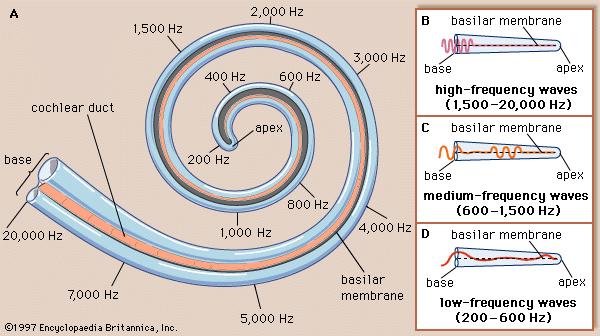

Frequency organization of the cochlea. A shows the activation that occurs at each frequency. B, C, and D show the cochlea unraveled and illustrate how high, medium, and low frequencies, respectively, propagate across the basilar membrane (adapted from Encyclopedia Britannica, 1997).

Several theories have been proposed to account for pitch perception. We’ll briefly discuss three of them here: temporal theory, volley theory, and place theory. The temporal theory of pitch perception asserts that frequency is coded by the activity level of a sensory neuron: a given hair cell, or group of hair cells, drives action potentials in auditory nerve fibers at a rate related to the frequency of the sound wave. Temporal theory suggests that even at high amplitudes (loud sounds), when large groups of neurons are firing (sending action potentials), there is a periodicity to the firing that corresponds to the periodicity of the auditory stimulus (Javel & Mott, 1988). However, neurons have a maximum firing rate that falls within the range of frequencies humans can perceive. To completely explain pitch perception, temporal theory must therefore explain how we perceive pitches above the maximum firing rate of the neurons that encode the signal (Shamma, 2001).

In response to this problem, volley theory describes groups of neurons that fire in and out of phase with one another, so that together they can code firing rates higher than any single neuron could sustain. In the 1930s, Ernest Wever and Charles Bray proposed that neurons could fire in volleys whose combined output recreates the frequency of the original sound stimulus (Wever & Bray, 1937). However, because later studies determined that phase synchrony can code frequencies only up to 10,000 Hz, volley theory also cannot account for all the sounds we are able to hear (Goldstein, 1973). Place theory, on the other hand, suggests that the basilar membrane of the cochlea has specific regions where hair cells trigger action potentials in response to different frequencies of sound.
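The difference between the temporal and volley accounts can be made concrete with a toy simulation. The sketch below is an illustration under stated assumptions, not a model taken from the studies cited: spikes are perfectly phase-locked to the stimulus, a single neuron is capped at a hypothetical 1000 Hz (`MAX_RATE_HZ`), and the coded frequency is read out as one over the mean interval between spikes. It also ignores the phase-synchrony ceiling that limits volley coding.

```python
import math

# Hypothetical ceiling on one neuron's firing rate (Hz). The text says only that
# such a ceiling exists within the audible range; 1000 Hz is illustrative.
MAX_RATE_HZ = 1000.0


def cycles_per_spike(freq_hz, max_rate_hz=MAX_RATE_HZ):
    """How many stimulus cycles pass between spikes of a single neuron."""
    return math.ceil(freq_hz / max_rate_hz)


def single_neuron_spikes(freq_hz, duration_s, max_rate_hz=MAX_RATE_HZ):
    """Temporal code: one phase-locked neuron fires on every k-th cycle,
    where k = 1 if the neuron can keep up with the stimulus."""
    period = 1.0 / freq_hz
    k = cycles_per_spike(freq_hz, max_rate_hz)
    n_cycles = round(duration_s * freq_hz)
    return [c * period for c in range(0, n_cycles, k)]


def volley_spikes(freq_hz, duration_s, max_rate_hz=MAX_RATE_HZ):
    """Volley code: k neurons each fire on every k-th cycle, offset by one
    cycle from each other, so the pooled train marks every stimulus cycle."""
    period = 1.0 / freq_hz
    k = cycles_per_spike(freq_hz, max_rate_hz)
    n_cycles = round(duration_s * freq_hz)
    pooled = []
    for neuron in range(k):
        pooled.extend(c * period for c in range(neuron, n_cycles, k))
    return sorted(pooled)


def rate_from_spikes(spike_times):
    """Estimate the coded frequency as 1 / mean inter-spike interval."""
    intervals = [b - a for a, b in zip(spike_times, spike_times[1:])]
    return 1.0 / (sum(intervals) / len(intervals))


for f in (500, 3000):
    print(f, "Hz stimulus:",
          round(rate_from_spikes(single_neuron_spikes(f, 0.1))), "Hz from one neuron,",
          round(rate_from_spikes(volley_spikes(f, 0.1))), "Hz from the volley")
```

Under these assumptions, a 3000 Hz tone is tracked at only 1000 Hz by one neuron, which must skip cycles, whereas the pooled volley of three staggered neurons recovers 3000 Hz; at 500 Hz both readouts match the stimulus.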

Contrary to temporal and volley theories, the place model of auditory transmission, proposed by Hermann von Helmholtz (although many had accepted the idea before Helmholtz’s time), suggests that our perception of pitch arises because different places on the basilar membrane are activated depending on the frequency of the sound (Barnes, 1897). Place theory can account for extremely high frequencies as well as our ability to discriminate sounds of many types and frequencies at the same time. Additionally, experiments with cochlear implants, which stimulate chosen places along the cochlea at controlled rates without the overall vibration of the cochlea that loud mixtures of natural sound produce, have demonstrated that at low stimulation rates, pitch ratings were proportional to the logarithm of the stimulation rate and also decreased with distance from the round window (a membrane at the base of the cochlea, facing the middle ear). At higher stimulation rates, effects of firing rate were weak, whereas effects of place along the basilar membrane were strong. These findings suggest that hearing follows the rules of temporal and volley theory below 1000 Hz and that place theory encodes frequencies above 5000 Hz. Frequencies between 1000 and 5000 Hz may use all three modes of encoding, drawing on both the location along the basilar membrane and the firing rates of individual neurons and neuron groups (Fearn, Carter & Wolfe, 1999). Further research has produced similar findings, suggesting that both the rate of action potentials and place contribute to our perception of pitch. However, frequencies above 5000 Hz can be encoded only by place cues (Shamma, 2001).
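Place theory implies a map from position on the basilar membrane to the frequency that position responds to best. The text does not give such a map, so the sketch below swaps in the Greenwood frequency-position function, a widely used empirical fit, with its commonly cited human constants (A = 165.4 Hz, a = 2.1, k = 0.88, position measured as a fraction of cochlear length from the apex). The `coding_modes` helper simply restates the frequency ranges from the paragraph above; all function names are illustrative.

```python
import math

# Greenwood frequency-position function, F = A * (10**(a*x) - k),
# with constants commonly cited for the human cochlea.
A_HZ = 165.4
SLOPE = 2.1
K = 0.88


def place_to_frequency(x):
    """Best frequency (Hz) at relative position x on the basilar membrane,
    where x = 0 is the apex (far end) and x = 1 is the base (near the oval window)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A_HZ * (10 ** (SLOPE * x) - K)


def frequency_to_place(freq_hz):
    """Inverse of place_to_frequency: where along the membrane freq_hz peaks."""
    return math.log10(freq_hz / A_HZ + K) / SLOPE


def coding_modes(freq_hz):
    """Coding modes by frequency range, as summarized in the text
    (Fearn, Carter & Wolfe, 1999; Shamma, 2001)."""
    if freq_hz < 1000:
        return {"temporal", "volley"}
    if freq_hz <= 5000:
        return {"temporal", "volley", "place"}
    return {"place"}


for f in (250, 2000, 8000):
    print(f"{f} Hz peaks {frequency_to_place(f):.2f} of the way from apex to base;"
          f" modes: {sorted(coding_modes(f))}")
```

High frequencies map near the base and low frequencies near the apex, which is why hair cells near the base of the basilar membrane respond best to high-frequency sounds.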

SOUND LOCALIZATION

The ability to locate sound in our environments is an important part of hearing. Localizing sound could be considered similar to the way that we perceive depth in our visual fields. Like the monocular and binocular cues that provide information about depth, the auditory system uses both monaural (one-eared) and binaural (two-eared) cues to localize sound.

Each pinna interacts with incoming sound waves differently, depending on the sound’s source relative to our bodies. This interaction provides a monaural cue that is helpful in locating sounds that occur above or below and in front or behind us. The sound waves received by your two ears from sounds that come from directly above, below, in front, or behind you would be identical; therefore, monaural cues are essential (Grothe, Pecka, & McAlpine, 2010).

Binaural cues, on the other hand, provide information on the location of a sound along a horizontal axis by relying on differences in patterns of vibration of the eardrum between our two ears. If a sound comes from an off-center location, it creates two types of binaural cues: interaural level differences and interaural timing differences. Interaural level difference refers to the fact that a sound coming from the right side of your body is more intense at your right ear than at your left ear because of the attenuation of the sound wave as it passes through your head. Interaural timing difference refers to the small difference in the time at which a given sound wave arrives at each ear (figure below). Certain brain areas monitor these differences to construct where along a horizontal axis a sound originates (Grothe et al., 2010).
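Interaural timing differences grow as a source moves from straight ahead toward one side. A common textbook approximation, not one the text itself uses, is Woodworth's spherical-head formula, ITD = (r / c)(θ + sin θ), where θ is the azimuth in radians. The sketch assumes a head radius of 8.75 cm and a speed of sound of 343 m/s; both are typical values, not measurements from the sources cited here.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average adult head radius (8.75 cm)
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 °C


def woodworth_itd(azimuth_deg, r=HEAD_RADIUS_M, c=SPEED_OF_SOUND_M_S):
    """Interaural timing difference (seconds) for a distant source, using
    Woodworth's spherical-head approximation ITD = (r / c) * (theta + sin(theta)).
    0 degrees = straight ahead; 90 degrees = directly opposite one ear."""
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (r / c) * (theta + math.sin(theta))


for az in (0, 15, 45, 90):
    print(f"{az:>2} degrees: ITD = {woodworth_itd(az) * 1e6:.0f} microseconds")
```

At 90 degrees the formula gives roughly 650 µs, in line with the maximal human interaural time difference of about 600 µs discussed in this section; a source straight ahead gives zero.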

Localizing sound involves the use of both monaural and binaural cues. (credit “plane”: modification of work by Max Pfandl)

In humans, the maximal interaural time difference is about 600 µs, meaning that the arrival times of the same sound at the two ears differ by no more than this amount. In mammals, signals sent through the auditory nerve from the cochlea eventually arrive at the superior olivary complex, a collection of nuclei located mainly in the pons of the brainstem that also extends into the medulla. The superior olivary complex receives projections predominantly from the anteroventral cochlear nucleus (AVCN) via the trapezoid body (also in the brainstem) and is the first major site where auditory information from the left and right ears converges (Oliver, Beckius & Shneiderman, 1995). Within the superior olivary complex, the medial superior olive is thought to help locate the azimuth of a sound, that is, the angle to the left or right at which the sound source is located. Sound elevation cues are not processed in the superior olivary complex: cells of the dorsal cochlear nucleus (DCN), which are thought to contribute to localization in elevation, bypass it and project directly to the inferior colliculus, where the signal is further processed. Because the superior olivary complex combines input from both ears but handles only horizontal information, it supports localization along the azimuth axis (Hudspeth, Jessell, Kandel, Schwartz & Siegelbaum, 2013). Information from the inferior colliculus, in the upper (dorsal) portion of the brainstem, is then relayed through the medial geniculate nucleus of the thalamus to the primary auditory cortex in the temporal lobe, where the final stages of classifying, categorizing, and understanding sound take place.
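One way to picture how the medial superior olive could recover azimuth from timing is the Jeffress coincidence-detector model, a classic computational account that is not described in the text: each detector receives input from both ears through a different internal delay and responds most when the two inputs coincide. Computationally, that amounts to a cross-correlation restricted to physiologically plausible lags. The sketch below assumes a 100 kHz sampling rate, a search window of ±700 µs, and a white-noise burst as the stimulus; how closely the mammalian medial superior olive follows the Jeffress scheme is still debated.

```python
import random

SAMPLE_RATE_HZ = 100_000  # assumed sampling rate, giving 10-microsecond resolution
MAX_ITD_S = 700e-6        # search window slightly wider than the ~600 µs human maximum


def broadband_sound(n_samples, seed=1):
    """Reproducible white-noise burst standing in for a natural sound."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]


def delayed(signal, n_samples):
    """The same sound arriving n_samples later (leading silence, same length)."""
    return [0.0] * n_samples + signal[:len(signal) - n_samples]


def estimate_itd(left, right, fs=SAMPLE_RATE_HZ, max_itd_s=MAX_ITD_S):
    """Jeffress-style estimate: each candidate lag acts like one coincidence
    detector; the lag whose left/right products sum highest wins.
    Positive result = right ear hears the sound later (source on the left)."""
    max_lag = round(max_itd_s * fs)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[i] * right[i + lag]
                    for i in range(len(left)) if 0 <= i + lag < len(right))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / fs


sound = broadband_sound(2000)
left_ear, right_ear = sound, delayed(sound, 30)  # right ear 30 samples (300 µs) late
print(f"estimated ITD: {estimate_itd(left_ear, right_ear) * 1e6:.0f} microseconds")
```

Delaying the right-ear copy by 30 samples (300 µs) yields an estimate of 300 µs; swapping the ears flips the sign, which indicates which ear heard the sound first.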

Basic schematic of the human auditory system including brainstem structure and pathways leading to the primary auditory cortex of the temporal lobe. (Adapted from Purves, Augustine, Fitzpatrick, Katz, LaMantia, McNamara & Williams, 2001)

Although we are quite good at detecting the angular location of sound in the environment, the human auditory system has only a limited ability to judge distance. In some cases, the level of a sound allows us to discriminate between near and far, as when someone whispers nearby compared to when someone speaks or yells from far away. In less obvious situations, the auditory system uses six strategies to judge the distance of a sound source: direct/reflection ratio, loudness, sound spectrum, initial time delay gap (ITDG), movement, and level difference. In enclosed spaces, the auditory system can use the ratio between direct sound from the source and sound reflected off walls or other objects to estimate distance. Loudness is fairly obvious, in that distant sources tend to be quieter. Sound spectrum estimates distance by how muffled a sound is when it reaches the ear: air dampens high frequencies faster than low frequencies, so a distant source may sound more muffled than a close one because its high frequencies are attenuated as the sound travels. ITDG describes the time difference between the arrival of the direct wave and the arrival of the first strong reflection at the listener. Near sources create a larger ITDG, with the first reflection taking longer to reach the ears, whereas for more distant sources the direct and reflected sound waves travel similar path lengths. Movement refers to a concept that is also important for visual perception, motion parallax: closer sources appear to move past the listener faster than sources far away (similar to the Doppler effect). Motion parallax provides additional auditory information that is important for estimating the distance of a sound source as well as other characteristics such as its size (Yost, 2018). Finally, level difference refers to the fact that a nearby source creates a greater difference in level between the two ears than a source that is farther away (these differences are often so small that we cannot consciously perceive them).
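Two of the distance cues above, loudness and ITDG, follow from simple geometry. The sketch below assumes free-field inverse-square spreading for the direct sound (about 6 dB quieter per doubling of distance) and a single floor reflection with source and listener both 1.5 m above the floor; real rooms add many reflections, and the function names are illustrative.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 °C


def direct_level_drop_db(distance_m, ref_distance_m=1.0):
    """How many dB quieter the direct sound is than at ref_distance_m,
    assuming free-field inverse-square spreading (about 6 dB per doubling)."""
    return 20.0 * math.log10(distance_m / ref_distance_m)


def itdg_s(distance_m, height_m, c=SPEED_OF_SOUND_M_S):
    """Initial time delay gap (seconds) when the first strong reflection is
    off the floor, with source and listener both height_m above it."""
    direct_path = distance_m
    # Mirror-image source below the floor: vertical separation is 2 * height_m.
    reflected_path = math.hypot(distance_m, 2.0 * height_m)
    return (reflected_path - direct_path) / c


for d in (1.0, 2.0, 4.0, 10.0):
    print(f"{d:>4} m: direct sound {direct_level_drop_db(d):4.1f} dB down,"
          f" ITDG = {itdg_s(d, height_m=1.5) * 1000:.2f} ms")
```

Moving the source from 1 m to 10 m lowers the direct sound by 20 dB and shrinks the ITDG from about 6.3 ms to about 1.3 ms, matching the point that near sources produce a larger gap.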

HEARING LOSS

Deafness is the partial or complete inability to hear. Some people are born deaf, which is known as congenital deafness. Many others develop hearing loss because of age, genetic predisposition, or environmental effects, including exposure to extreme noise (noise-induced hearing loss, as shown in the figure below), certain illnesses (such as measles or mumps), or damage due to toxins (such as those found in certain solvents and metals).

Environmental factors that can lead to hearing loss include regular exposure to loud music or construction equipment. (a) Rock musicians and (b) construction workers are at risk for this type of hearing loss. (credit a: modification of work by Kenny Sun; credit b: modification of work by Nick Allen)


Given the mechanical nature by which the sound wave stimulus is transmitted from the eardrum through the ossicles to the oval window of the cochlea, some degree of hearing loss is inevitable. With conductive hearing loss, hearing problems are associated with a failure in the vibration of the eardrum and/or movement of the ossicles. These problems are often dealt with through devices like hearing aids that amplify incoming sound waves to make vibration of the eardrum and movement of the ossicles more likely to occur.

Hearing problems associated with a failure to transmit neural signals from the cochlea to the brain are referred to as sensorineural hearing loss. Ménière’s disease is one cause of sensorineural hearing loss: it involves degeneration of inner ear structures that can lead to hearing loss, tinnitus (constant ringing or buzzing), vertigo (a sense of spinning), and an increase in pressure within the inner ear (Semaan & Megerian, 2011). This kind of loss cannot be treated with hearing aids, but some individuals can be treated with cochlear implants. Cochlear implants are electronic devices that consist of a microphone, a speech processor, and an electrode array that stimulates specific parts of the inner ear, taking over the role of the hair cells that normally transduce sound. The device receives incoming sound information and directly stimulates the auditory nerve to transmit information to the brain. Cochlear implants have not only aided individuals with hearing impairment but have also led to new understanding of cochlear function and of how sound is organized and processed, as discussed above in terms of the temporal, volley, and place theories of pitch perception (Moore, 2003).
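The speech processor in a cochlear implant relies on place coding: it divides incoming sound into frequency bands and sends each band to an electrode at a matching place along the cochlea, low frequencies toward the apex and high frequencies toward the base. The sketch below is hypothetical and not any manufacturer's processing strategy; the electrode count (12), the 200 to 8000 Hz range, and the logarithmic band spacing are all illustrative assumptions.

```python
import math

# Hypothetical device: 12 electrodes covering 200-8000 Hz. Real devices differ
# in electrode count, frequency range, and band spacing.
N_ELECTRODES = 12
LOW_HZ, HIGH_HZ = 200.0, 8000.0


def band_edges(n=N_ELECTRODES, low=LOW_HZ, high=HIGH_HZ):
    """Logarithmically spaced band edges (Hz), lowest band first."""
    ratio = (high / low) ** (1.0 / n)
    return [low * ratio ** i for i in range(n + 1)]


def electrode_for(freq_hz, n=N_ELECTRODES, low=LOW_HZ, high=HIGH_HZ):
    """Index of the electrode that would carry freq_hz: 0 is the most apical
    (lowest band), n - 1 the most basal (highest band); None if out of range."""
    if not low <= freq_hz < high:
        return None
    position = math.log(freq_hz / low) / math.log(high / low)  # 0..1 on a log scale
    return min(int(position * n), n - 1)


for f in (250, 1000, 4000):
    print(f"{f} Hz -> electrode {electrode_for(f)}")
```

Higher frequencies land on higher-numbered, more basal electrodes, so under these assumptions 250 Hz, 1000 Hz, and 4000 Hz map to electrodes 0, 5, and 9.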

Deaf Culture

In the United States and other places around the world, deaf people have their own language, schools, and customs. In the United States, deaf individuals often communicate using American Sign Language (ASL); ASL has no verbal component and is based entirely on visual signs and gestures. The primary mode of communication is signing. One of the values of deaf culture is to continue traditions like using sign language rather than teaching deaf children to try to speak, read lips, or have cochlear implant surgery.

When a child is diagnosed as deaf, parents have difficult decisions to make. Should the child be enrolled in mainstream schools and taught to verbalize and read lips? Or should the child be sent to a school for deaf children to learn ASL and have significant exposure to deaf culture? Do you think there might be differences in the way that parents approach these decisions depending on whether or not they are also deaf? Fortunately, modern technology has provided many resources, and many groups have been organized to create and support new tools and media that assist deaf individuals with everything from finding educational opportunities to employment and general communication (for example, the National Association of the Deaf; the World Federation of the Deaf; the Registry of Interpreters for the Deaf; Libraries, Media, and Archives: American Sign Language; and the Alexander Graham Bell Association for the Deaf and Hard of Hearing).

Individuals who are deaf and embrace deaf culture tend to consider their lack of hearing a difference in human experience rather than a disability (Lane, Pillard & Hedberg, 2011). There are over 200 different sign languages and variations across the world, including 114 sign languages listed in the Ethnologue database and 157 additional sign languages, systems of communication, and dialects (Lewis & Simons, 2009). Today many universities and schools around the world are designed for deaf individuals. One of them, Gallaudet University in Washington, D.C. (founded in 1864), the only liberal arts university for deaf students in the United States, houses within its library the largest collection of deaf-related materials, including 234,000 books and other materials in different formats.

SUMMARY

Sound waves are funneled into the auditory canal and cause vibrations of the eardrum; these vibrations move the ossicles. As the ossicles move, the stapes presses against the oval window of the cochlea, which causes fluid inside the cochlea to move. As a result, hair cells embedded in the basilar membrane become bent and sway like a tree in the wind, which sends neural impulses to the brain via the auditory nerve. From the auditory nerve, signals are sent to the superior olivary nuclei in the brainstem and then on to the inferior colliculus in the upper (dorsal) portions of the brainstem. From the inferior colliculus, signals are sent to the medial geniculate nucleus of the thalamus where the signal is transmitted to the primary auditory cortex in the temporal lobe.

Pitch perception and sound localization are important aspects of hearing. Our ability to perceive pitch relies on both the firing rate of the hair cells in the basilar membrane and their location within the membrane. In terms of sound localization, both monaural and binaural cues are used to locate where sounds originate in our environment. However, our ability to estimate how far away a sound originates is limited. To make the best possible estimate of the distance to a sound source, the auditory system uses at least six different strategies: the direct/reflection ratio, loudness, sound spectrum, initial time delay gap (ITDG), movement, and level difference. Motion parallax refers to a type of depth perception cue in which objects that are closer appear to move faster than objects that are farther away. Like its counterpart in the visual system, this cue provides additional auditory information for estimating the distance and other characteristics of a sound source.

Individuals can be born deaf, or they can develop deafness as a result of age, genetic predisposition, and/or environmental causes. Hearing loss that results from a failure of the vibration of the eardrum or the resultant movement of the ossicles is called conductive hearing loss. Hearing loss that involves a failure of the transmission of auditory nerve impulses to the brain is called sensorineural hearing loss.

References:

OpenStax Psychology text by Kathryn Dumper, William Jenkins, Arlene Lacombe, Marilyn Lovett and Marion Perlmutter licensed under CC BY 4.0. https://openstax.org/details/books/psychology

Exercises

Review Questions:

1. Hair cells located near the base of the basilar membrane respond best to ________ sounds.

a. low-frequency

b. high-frequency

c. low-amplitude

d. high-amplitude

2. The three ossicles of the middle ear are known as ________.

a. malleus, incus, and stapes

b. hammer, anvil, and stirrup

c. pinna, cochlea, and utricle

d. both a and b

3. Hearing aids might be effective for treating ________.

a. Ménière’s disease

b. sensorineural hearing loss

c. conductive hearing loss

d. interaural time differences

4. Cues that require two ears are referred to as ________ cues.

a. monocular

b. monaural

c. binocular

d. binaural

Critical Thinking Question:

1. Given what you’ve read about sound localization, from an evolutionary perspective, how does sound localization facilitate survival?

2. How can temporal and place theories both be used to explain our ability to perceive the pitch of sound waves with frequencies up to 4000 Hz?

Personal Application Question:

1. If you had to choose to lose either your vision or your hearing, which would you choose and why?

Glossary:

basilar membrane

binaural cue

cochlea

cochlear implant

conductive hearing loss

congenital deafness

deafness

hair cell

incus

interaural level difference

interaural timing difference

malleus

Ménière’s disease

monaural cue

pinna

place theory of pitch perception

sensorineural hearing loss

stapes

temporal theory of pitch perception

tympanic membrane

vertigo

Answers to Exercises

Review Questions:

1. B

2. D

3. C

4. D

Critical Thinking Question:

1. Sound localization would have allowed early humans to locate prey and protect themselves from predators.

2. Pitch of sounds below this threshold could be encoded by the combination of the place and firing rate of stimulated hair cells. So, in general, hair cells located near the tip of the basilar membrane would signal that we’re dealing with a lower-pitched sound. However, differences in firing rates of hair cells within this location could allow for fine discrimination between low-, medium-, and high-pitch sounds within the larger low-pitch context.

Glossary:

basilar membrane: thin strip of tissue within the cochlea that contains the hair cells which serve as the sensory receptors for the auditory system

binaural cue: two-eared cue to localize sound

cochlea: fluid-filled, snail-shaped structure that contains the sensory receptor cells of the auditory system

cochlear implant: electronic device that consists of a microphone, a speech processor, and an electrode array to directly stimulate the auditory nerve to transmit information to the brain

conductive hearing loss: failure in the vibration of the eardrum and/or movement of the ossicles

congenital deafness: deafness from birth

deafness: partial or complete inability to hear

hair cell: auditory receptor cell of the inner ear

incus: middle ear ossicle; also known as the anvil

interaural level difference: sound coming from one side of the body is more intense at the closest ear because of the attenuation of the sound wave as it passes through the head

interaural timing difference: small difference in the time at which a given sound wave arrives at each ear

malleus: middle ear ossicle; also known as the hammer

Ménière’s disease: results in a degeneration of inner ear structures that can lead to hearing loss, tinnitus, vertigo, and an increase in pressure within the inner ear

monaural cue: one-eared cue to localize sound

pinna: visible part of the ear that protrudes from the head

place theory of pitch perception: different portions of the basilar membrane are sensitive to sounds of different frequencies

sensorineural hearing loss: failure to transmit neural signals from the cochlea to the brain

stapes: middle ear ossicle; also known as the stirrup

temporal theory of pitch perception: sound’s frequency is coded by the activity level of a sensory neuron

tympanic membrane: eardrum

vertigo: spinning sensation