Listening and Speaking Through the Computer

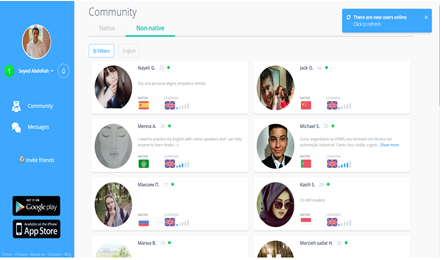

When learners are capable of interacting with more fluent speakers, they can use the computer as a conduit to native speakers and more advanced second language learners around the world. Today, advances in information technology have made intercultural communication easier than ever. High-speed Internet connections and a range of computer technologies can make computer-mediated communication (CMC) a reliable part of teaching and learning. Social media websites and applications (such as WeChat, Facebook, Google+, Twitter, and Instagram) can be used in classroom and non-classroom settings to enable students to interact with native and non-native English speakers via text, audio, and video chats, both synchronously and asynchronously. There is also a host of technologies designed specifically for language learning. For instance, Speaky (http://www.speaky.com) is a multi-platform social networking application (web, iOS, and Android) that enables learners to practice more than 100 languages through intercultural communication via text and audio messages. Users create a profile (where they provide their first language, proficiency level in the language they aim to practice, birthday, and goals) and are paired with others who are native speakers of that language. Others interested in practicing the user’s native language can also send them communication requests. Other currently popular language exchange websites and applications include LingoGlobe (http://www.lingoglobe.com/), WeSpeke (http://en.wespeke.com/), Coffee Learning (https://coeffee.com/), and ePals (http://www.epals.com). Of course, the same safety rules and etiquette that apply in real-life settings apply to these environments as well, and teachers and learners need to exercise caution when using these platforms. Figure 3.2 shows the interface of Speaky.

Figure 3.2. An example from the Speaky community.

Virtual immersive environments (VIE), including the cave automatic virtual environment (CAVE), virtual reality (VR), and augmented reality (AR) technologies, provide affordances for listening and speaking. What these technologies have in common is a tendency to create environments that support student immersion. Immersion is an age-old concept defined as “a psychological state characterized by perceiving oneself to be enveloped by, included in, and interacting with an environment that provides a continuous stream of stimuli and experiences” (Witmer & Singer, 1998, p. 227). With the technologies available today, we can develop environments that can potentially immerse students in the learning process. VIE, then, is the general term for such environments, where VR experiences are created. A CAVE is a room-bound setting in which a VR experience is created using computers and peripherals such as projectors, depth cameras, and other sensors. For instance, the CAVE at Washington State University, called the Virtual Immersive Teaching and Learning Lab (VITaL), comprises a computer with peripherals, three projectors, three screens, one Microsoft Kinect camera (a motion sensor, see http://microsoft.com for more information), a Leap Motion Controller (a motion sensor, see https://www.leapmotion.com for more information), and an Xbox One gaming console. In VITaL, students can participate in Minecraft, dissect virtual frogs (https://www.youtube.com/watch?v=_lrjOc_iuyQ), design 3D models and print them using a 3D printer (https://www.youtube.com/watch?v=JFWWHuOfnNY), and do many other things. There are also technologies that can transform the room into a gaming platform (see Microsoft RoomAlive, https://goo.gl/mB6jVt) or create an animated 3D replica of a distant interlocutor right in the room (see Microsoft Room2Room, https://goo.gl/wxXTSy).

VR headsets such as the Oculus Rift (https://www.oculus.com/rift/), HTC VIVE (https://www.vive.com), or Microsoft HoloLens (https://www.microsoft.com/en-us/hololens) also offer great affordances for immersion through head-mounted mobile technologies. AR programs and gear can, as the name suggests, add other important information to the learners’ experiences. For example, an iPad with the application 4D Anatomy (https://www.4danatomy.com/) can bring a regular anatomy lesson handout to life with added multimedia information about the human body (see https://www.youtube.com/watch?v=ITEsxjnmvow). Educators can also make their own AR content using Metaverse (https://studio.gometa.io/). All these environments and technologies can provide affordances for speaking and listening through and around computers.

Virtual worlds (VWs), a form of VIE, are high-fidelity 3D environments simulating real-world contexts and features (Kim, Lee, & Thomas, 2012). VWs provide engaging environments for synchronous and asynchronous listening and speaking practice. These worlds, such as Second Life (https://secondlife.com/), Active Worlds (https://www.activeworlds.com/), and Twinity (http://www.twinity.com/), are online communities of users interacting and manipulating objects in graphical three-dimensional (3D) environments (Bishop, 2009). Users from around the world can bring their virtual characters (avatars) into different virtual social spaces such as shopping centers, schools, beaches, and restaurants, where they can engage in social interactions and activities with other members through in-world communication tools such as text and audio chat. One popular form of VW is the massively multiplayer online game (MMOG), such as Quest Atlantis Remixed (http://atlantisremixed.org/) and World of Warcraft (https://worldofwarcraft.com/). These worlds can support engaging tasks for listening and speaking. During gameplay, students can collaborate towards completing a teacher-assigned task by communicating over the in-game chat server or third-party audio chat applications like Discord (https://discordapp.com/) or TeamSpeak (https://www.teamspeak.com/). Because these games are organized around “quests,” they can be very engaging as the students actively collaborate, strategize, problem-solve, and communicate towards accomplishing communal goals.

Asynchronous means of communication can also be used in language classes, especially when participants face time differences, poor Internet connectivity, or a need for task preparation time. Almost all synchronous applications allow for asynchronous messaging as well, but some applications, like voice-based and video-based discussion boards and audio e-mail, are asynchronous by design. An example of a multi-modal discussion board is VoiceThread (https://voicethread.com/). Through this application, educators and students can create multimedia discussion posts by integrating a variety of resources such as video and audio recordings, pictures, texts, and markups. Other students can then post their own multimedia responses to the thread. Activities using both synchronous and asynchronous audio and video exchanges also include dialogue journals (see chapter 2 for more on written dialogue journals), in which two or more participants record messages and send them to each other in a running stream of conversation.

In addition, learners can practice speaking and listening through the computer by recording audio segments in word processing software such as Microsoft Word or in presentation software such as Microsoft PowerPoint. Students can even trade suggestions for essay revision in Microsoft Word through audio commenting capabilities, or use Google Docs (http://docs.google.com) or a screen capturing application such as Jing (https://www.techsmith.com/jing-tool.html) to provide each other with multimodal feedback. Finally, they can use an array of social networking applications such as WhatsApp (https://www.whatsapp.com/), Telegram (https://telegram.org/), and WeChat (https://web.wechat.com/) to send each other recorded audio and/or video messages or join groups where they can practice their listening and speaking. Again, a major benefit of this task structure is that learners can interact socially and receive authentic oral input from peers and others. They also have opportunities to use spoken language with a wide variety of listeners in an atmosphere that allows them to record and re-record until they are satisfied with their results. Moreover, as exposure to input rises, so can learners’ noticing of language, prompted by the feedback they receive across multiple channels of interaction with peers and other interlocutors. Because these activities can be designed to be authentic, meaningful, and feedback-laden, they can be engaging as well. In these ways, working through the computer enables learners to develop their skills in an environment supportive of language learning and task engagement principles.
